January 10, 2023 / by Adam Jones

Is Character Encoding Still Relevant?

Ten years ago, SimulTrans published an article on the "Relevance of Character Encoding." At the time, character encoding was a critical challenge for many developers. Is it still important today?

Encoding settings can still make or break a software or website localization project. They are the difference between intelligible text in a functional application and broken software punctuated with unusual glyphs. Although encoding is much less of an issue today, getting the settings right remains essential.

As we previously explained in a separate two-page encoding summary document:

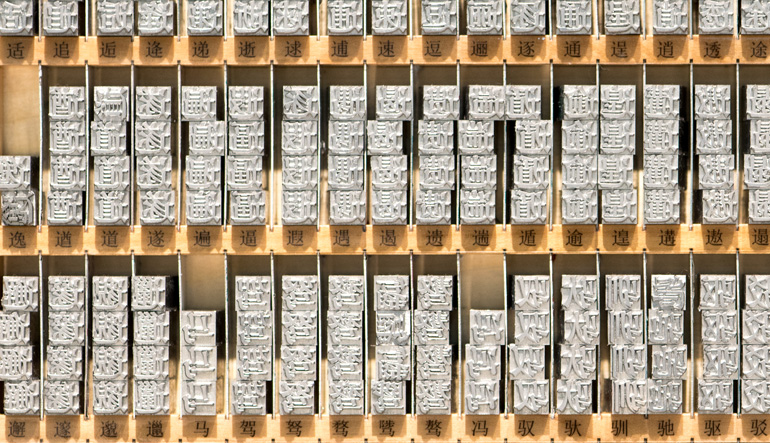

Character encoding is the organization of the set of numeric codes that represent all the meaningful characters of a script system in memory. Each character is stored in memory as a number. When a user types, the user's keypresses are converted to character codes; when the characters are displayed onscreen, the character codes are converted to the glyphs of a font. In short, character encoding uses a table to match the binary representation of each character with the character as it is displayed or printed.
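A minimal Python sketch of the round trip described above. The built-in `ord()` returns the number that stands for a character, `chr()` turns that number back into the character, and `str.encode()` shows the bytes actually stored under a given encoding table. The helper names `char_codes` and `from_codes` and the sample string are hypothetical, chosen only for illustration.

```python
def char_codes(text: str) -> list[int]:
    """Return the numeric code (Unicode code point) of each character."""
    return [ord(ch) for ch in text]

def from_codes(codes: list[int]) -> str:
    """Rebuild the displayable text from its numeric codes."""
    return "".join(chr(code) for code in codes)

text = "Café"
codes = char_codes(text)
print(codes)                    # [67, 97, 102, 233]
print(from_codes(codes))        # Café

# The bytes kept in memory or on disk depend on the encoding table chosen.
print(text.encode("utf-8"))     # b'Caf\xc3\xa9'
print(text.encode("latin-1"))   # b'Caf\xe9'
```

The same four characters occupy five bytes in UTF-8 but four in Latin-1, which is why software must know which table was used before it can display the text.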

Developers once had to weigh carefully which encoding was most appropriate for a particular project, operating system, and language. From ANSI and Extended ASCII to Shift-JIS and Big5, we had to make specific encoding choices to maximize the set of characters we could display. Encoding was the reason that Macs had degree symbols while Windows had fractions (making it impossible to refer to 68½° on either operating system in their early years). Because each legacy encoding covered only a limited set of characters, displaying Chinese Hanzi and Japanese Katakana together was almost impossible.
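To see why the choice of legacy encoding mattered, the sketch below interprets the same single byte with two legacy tables that Python ships as codecs: `mac_roman` (classic Mac OS) and `cp1252` (Windows Western European). The byte value 0xA1 and the helper names `decode_with` and `can_encode` are assumptions for illustration. The point is that a byte has no meaning until an encoding table is applied, and a character missing from the table cannot be stored at all.

```python
# A single raw byte, as it might appear in a legacy text file.
RAW = bytes([0xA1])

def decode_with(codec: str) -> str:
    """Interpret the raw byte using one legacy encoding table."""
    return RAW.decode(codec)

def can_encode(text: str, codec: str) -> bool:
    """Return True if every character in text exists in the codec's table."""
    try:
        text.encode(codec)
    except UnicodeEncodeError:
        return False
    return True

print(decode_with("mac_roman"))      # ° (degree sign in Mac OS Roman)
print(decode_with("cp1252"))         # ¡ (inverted exclamation mark in Windows-1252)
print(can_encode("½", "mac_roman"))  # False: Mac OS Roman has no ½
print(can_encode("½°", "utf-8"))     # True: UTF-8 covers every code point
```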

Times changed in 1991 with the advent of Unicode, the all-encompassing encoding standard designed to cover all the world's living languages. As of January 2023, the latest version (Unicode 15.0) includes 161 scripts containing a total of 149,186 characters.

Almost all software resource files and webpages are now encoded in UTF-8, a variable-length character encoding that can represent every valid Unicode code point in one to four bytes. The text you are reading now is encoded in UTF-8; if you viewed the HTML source of this article, you would find the following line of code:
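To make "one to four bytes" concrete, here is a minimal hand-written UTF-8 encoder in Python, checked against the built-in `str.encode("utf-8")`. The function name `utf8_encode` is hypothetical, and the sketch assumes its input is a Unicode code point between 0 and 0x10FFFF; unlike a production encoder, it does not reject surrogate code points. The bit layout follows the UTF-8 definition in RFC 3629.

```python
def utf8_encode(code_point: int) -> bytes:
    """Encode one Unicode code point as UTF-8 (1 to 4 bytes).

    Hypothetical helper for illustration; assumes 0 <= code_point <= 0x10FFFF
    and does not reject surrogate code points.
    """
    if code_point < 0x80:        # up to 7 bits  -> 0xxxxxxx
        return bytes([code_point])
    if code_point < 0x800:       # up to 11 bits -> 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x10000:     # up to 16 bits -> 1110xxxx 10xxxxxx 10xxxxxx
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    if code_point <= 0x10FFFF:   # up to 21 bits -> 11110xxx + 3 continuation bytes
        return bytes([0xF0 | (code_point >> 18),
                      0x80 | ((code_point >> 12) & 0x3F),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    raise ValueError(f"not a Unicode code point: {code_point:#x}")

for ch in "Aà字😀":
    encoded = utf8_encode(ord(ch))
    assert encoded == ch.encode("utf-8")  # agrees with Python's own encoder
    print(ch, f"U+{ord(ch):04X}", len(encoded), encoded.hex(" "))
# A U+0041 1 41
# à U+00E0 2 c3 a0
# 字 U+5B57 3 e5 ad 97
# 😀 U+1F600 4 f0 9f 98 80
```

ASCII characters keep their single-byte values, which is one reason UTF-8 was easy to adopt for existing English-language files.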

```html
<meta charset="utf-8">
```

This encoding is why you can see Arabic, Japanese, and all the other characters in the language selector, view opening and closing quotation marks, and even make sense of that odd fractional temperature mentioned above, all on one page. For example, Unicode assigns the characters in the SimulTrans logo the following code points:

| Character | Description | Oct | Dec | Hex | HTML |
|---|---|---|---|---|---|
| à | latin small letter a with grave | 0340 | 224 | 0xE0 | `&#224;` |
| 字 | cjk unified ideograph-5b57 | 055527 | 23383 | 0x5B57 | `&#23383;` |
| π | greek small letter pi | 01700 | 960 | 0x3C0 | `&#960;` |
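Each numeric column in the table is the same code point written in a different base, and an HTML numeric character reference can be built from the decimal value. A short Python sketch using `ord()`, format specifiers, and the standard-library `unicodedata.name()` (which returns the official uppercase name, lowercased here to match the table). The helper name `describe` is hypothetical, and the leading-zero octal style mimics the table rather than Python's own `0o` prefix.

```python
import unicodedata

def describe(ch: str) -> dict[str, str]:
    """Return the table columns for one character (hypothetical helper)."""
    cp = ord(ch)  # the Unicode code point
    return {
        "char": ch,
        "name": unicodedata.name(ch).lower(),
        "oct": f"0{cp:o}",    # C-style octal with a leading zero
        "dec": str(cp),
        "hex": f"0x{cp:X}",
        "html": f"&#{cp};",   # decimal numeric character reference
    }

for ch in "à字π":
    print(" | ".join(describe(ch).values()))
# à | latin small letter a with grave | 0340 | 224 | 0xE0 | &#224;
# 字 | cjk unified ideograph-5b57 | 055527 | 23383 | 0x5B57 | &#23383;
# π | greek small letter pi | 01700 | 960 | 0x3C0 | &#960;
```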

Fortunately, you don't need to worry much about character encoding anymore. Once you choose UTF-8, most operating systems, browsers, editors, databases, and other systems that store and process text take care of the rest.
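Choosing UTF-8 in practice means two things: declaring it (as the `<meta charset="utf-8">` line does) and actually writing the bytes in UTF-8. A minimal Python sketch, assuming a hypothetical page saved to a temporary file: pass `encoding="utf-8"` explicitly rather than relying on the platform's default encoding, then read the file back with strict UTF-8 decoding, which raises `UnicodeDecodeError` if the bytes do not match the declaration.

```python
import tempfile
from pathlib import Path

# Hypothetical page: the declared charset must match the bytes written to disk.
page = """<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<title>68½°</title>
</head>
<body><p>à 字 π</p></body>
</html>
"""

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "index.html"
    # The default encoding depends on the platform and locale, so state it.
    path.write_text(page, encoding="utf-8")
    raw = path.read_bytes()
    # Strict decoding fails loudly if the file is not valid UTF-8.
    round_trip = path.read_text(encoding="utf-8")

assert round_trip == page
print("UTF-8 round trip OK")
```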

If your characters appear corrupted, accent marks go missing or show up where they don't belong, or text is replaced by rectangles, you are probably facing an encoding problem. Switching your settings to UTF-8 will often be the magic fix.
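One common form of this corruption is UTF-8 bytes being read with a legacy table such as Windows-1252. The Python sketch below reproduces that mistake and reverses it. It assumes the text was written as UTF-8 and mis-decoded as `cp1252` exactly once, which will not hold for every corrupted file; the function names `misread` and `repair` are hypothetical.

```python
def misread(text: str) -> str:
    """Simulate the bug: UTF-8 bytes decoded with the wrong (cp1252) table."""
    return text.encode("utf-8").decode("cp1252")

def repair(garbled: str) -> str:
    """Undo one cp1252 mis-decoding by recovering the original UTF-8 bytes."""
    return garbled.encode("cp1252").decode("utf-8")

original = "Café, 68½°"
garbled = misread(original)
print(garbled)          # CafÃ©, 68Â½Â°
print(repair(garbled))  # Café, 68½°
```

The stray "Ã" and "Â" characters are the "added accent marks" readers often notice: each is the first byte of a two-byte UTF-8 sequence shown as a separate Windows-1252 character.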

Written by Adam Jones

As President and COO of SimulTrans, Adam manages and supports the company worldwide. He has spent over 30 years helping customers launch products and content internationally. Adam graduated from Stanford University, where he studied Public Policy with an emphasis on Education.